Readability is in crisis. Even the abstracts from studies on readability are virtually incomprehensible. Let’s see what they really say.

What is readability? It’s a measure of how easy a text is to read. For example, how easy is it to read a blog post or white paper you wrote?

Readability is most commonly measured in grade levels. The average person reads in the range of Grade 6 to Grade 8. This might shock you. You’ve had 12 grades of education. Or you’ve had 15 or even 20. But you still read at a Grade 8 level or lower. Yes, you can read at a higher level, but you would do so only when you have to. Why is this?

- You are lazy. That’s no insult; I am, too. We all are.

- You are pressed for time. The more complex the text, the longer it takes to read.

- You want to understand what you read. The more complex the text, the more passes you by (especially if you are lazy and pressed for time, like the rest of us).

Your readers are just like you and me. They are lazy. They are pressed for time. And they often miss important details. If you want them to read your text, to understand your text and to take action, you have to make it easy to read.

There are several studies out on readability. These should help you better understand the subject. They should…but they don’t. Why? Because even their abstracts (summaries) are written at ridiculous grade levels.

Yes, studies on readability are hardly even readable.

For your education and just for fun, let’s edit three abstracts about readability right now. Let’s make them more readable. We will use Readable.io to measure readability, both before and after our edits. See if you can tell the difference.
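Curious what goes into a grade level score? The scores in this post come from Readable.io, and what follows is not its code. But one formula that comes up below, the Flesch-Kincaid grade level, is public. It uses just two things: words per sentence and syllables per word. Here is a rough sketch in Python. The function names are ours, and the syllable counter is a crude guess (it counts vowel groups), so expect its scores to differ from those of a real tool.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate (an assumption): count groups of vowels."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A trailing silent "e" (as in "make") usually adds no syllable,
    # but a trailing "le" (as in "table") usually does.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level:
    0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59
    """
    # Naive splitting: a sentence ends at ., ! or ?
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Words are runs of letters, with straight or curly apostrophes allowed.
    words = re.findall(r"[A-Za-z'’]+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


if __name__ == "__main__":
    before = ("Readability formulas purport to measure text difficulty "
              "based on specified text characteristics.")
    after = "These formulas predict how hard a text is to read."
    print(round(flesch_kincaid_grade(before), 1))
    print(round(flesch_kincaid_grade(after), 1))
```

Long sentences and long words both push the score up. That is why so much plain language editing comes down to shorter sentences and shorter words.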

STUDY #1: “Can Readability Formulas Be Used to Successfully Gauge Difficulty of Reading Materials?”

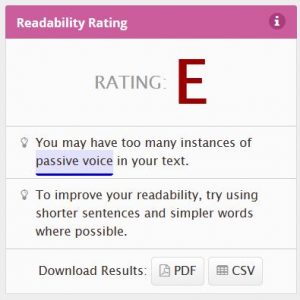

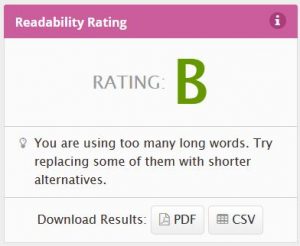

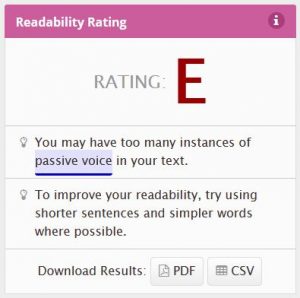

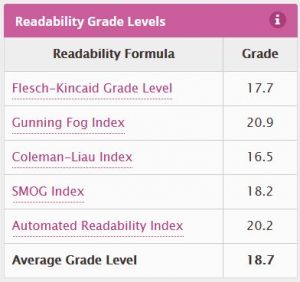

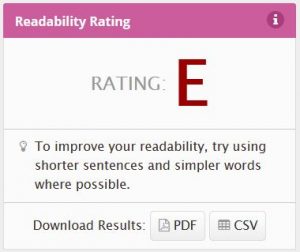

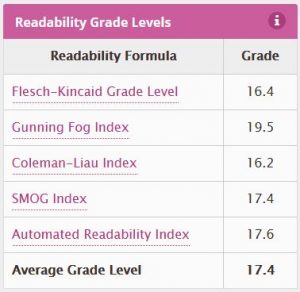

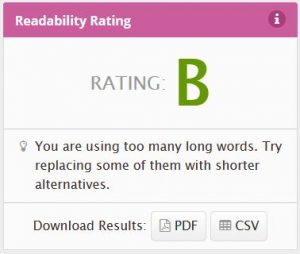

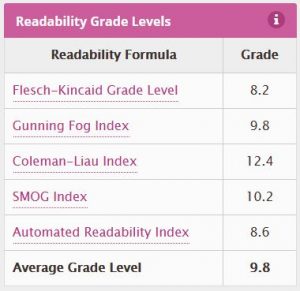

Here is the abstract before editing. This impenetrable wall of text is at a Grade 19 level, as these measures show.

Abstract: A grade level of reading material is commonly estimated using one or more readability formulas, which purport to measure text difficulty based on specified text characteristics. However, there is limited direction for teachers and publishers regarding which readability formulas (if any) are appropriate indicators of actual text difficulty. Because oral reading fluency (ORF) is considered one primary indicator of an elementary aged student’s overall reading ability, the purpose of this study was to assess the link between leveled reading passages and students’ actual ORF rates. ORF rates of 360 elementary-aged students were used to determine whether reading passages at varying grade levels are, as would be predicted by readability levels, more or less difficult for students to read. Results showed that a small number of readability formulas were fairly good indicators of text, but this was only true at particular grade levels. Additionally, most of the readability formulas were more accurate for higher ability readers. One implication of the findings suggests that teachers should be cautious when making instructional decisions based on purported “grade-leveled” text, and educational researchers and practitioners should strive to assess difficulty of text materials beyond simply using a readability formula.

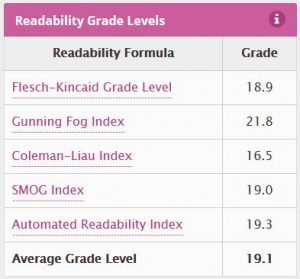

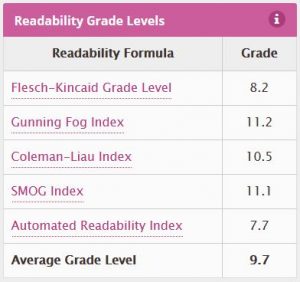

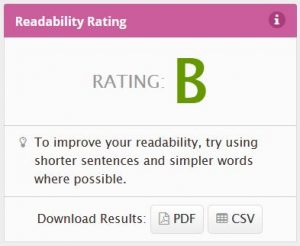

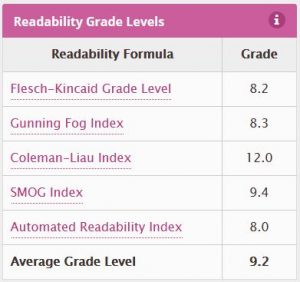

Did you get all that? Crystal clear? No? Well, here’s our edit, at an average Grade 9.7 level (or 8.2 on the most popular Flesch-Kincaid scale). Maybe this will help.

Abstract: We use at least one readability formula to measure the grade level of texts. These formulas use specific attributes to predict how hard the text is to read.

But teachers and publishers don’t know which formulas (if any) are most accurate.

Oral reading fluency (ORF) is a good sign of an elementary-aged student’s reading skill. The goal of this study was to compare:

- predicted grade level of texts

- students’ actual ORF rates

We gathered the ORF rates of 360 elementary-aged students. We used these to predict how hard it would be for them to read some texts. We compared these results with the readability levels measured by several formulas.

The results show that a few formulas grade the texts fairly well. But this is true only at some grade levels. Most of the readability formulas were more accurate for more skilled readers.

The results suggest that teachers should be cautious when using grade level readability formulas to make decisions about texts. The results also suggest that teachers and educational researchers should not rely solely on such formulas to assess how difficult a text is.

Did you understand the summary of the research better the second time? We used a few tricks, which you’ll find on our plain language writing page. Ready for another unreadable abstract?

STUDY #2: Reading between the vines: analyzing the readability of consumer brand wine web sites

This one is a little tastier, but it’s still about readability. Here is the abstract before editing. It is at a Grade 18.7 level, although the popular Flesch-Kincaid formula rates it one grade level better.

The findings suggest that, while certain target demographics may be assumed by grouping wine brand web sites based on readability measures, there are marked differences in readability across wine web sites of a similar nature that only serves to reinforce consumer confusion, rather than help remove it.

This is for two reasons: less sophisticated consumers will not respond to wine marketing messages they cannot understand, and more sophisticated wine drinkers will react more positively to messages that are clear and well‐written. Readability is equally important for these more sophisticated consumers.

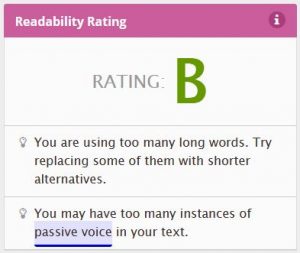

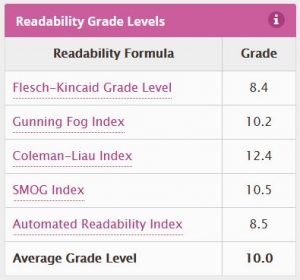

OK, here’s our edit, at a Grade 10 level.

Wine brand websites have different readability levels. We can group those sites based on those levels. For each group, we can assume who their target market is. However, this study finds that even similar wine websites have different readability levels. This confuses consumers, rather than helping them.

This is for two reasons:

- Less sophisticated wine buyers don’t respond to messages they don’t understand.

- More sophisticated wine buyers don’t respond to unclear and poorly written messages, either.

The bottom line: readability is just as important for high-end wine buyers.

Better? No? Our edits brought it down to a Grade 10 readability, and down to Grade 8.4 for Flesch-Kincaid. But that might not be enough in this case.

Perhaps that first paragraph is still a little fuzzy. Frankly, I had to read it several times to understand it. The plain language edit above is not enough to fix that first paragraph. That’s for one simple reason. It’s not just the words that are unclear. The message is just as unclear.

So, we’ll edit it one more time. No, we’ll rewrite it, so that you can understand what it really means. Or at least what we think it means after reading it so many times. This rewrite is at a Grade 9 readability level.

Some wine brand websites are easy to read. They have low grade-level readability measures. We can assume these websites target beginner wine-buyers.

Other websites are harder to read. They have high grade-level language. We can assume these websites target sophisticated wine-buyers.

But we found that readability levels vary widely at similar wine websites. They vary widely at websites that target sophisticated buyers. They also vary widely at wine websites that target beginner buyers. The study reveals that people get confused by these erratic readability levels.

This is for two reasons:

- Beginner wine buyers don’t respond to messages they don’t understand.

- Sophisticated wine buyers don’t respond to unclear and poorly written messages, either.

The bottom line: readability is just as important for high-end wine buyers.

We were going for clarity here, not to improve grade-level readability. However, in so doing, we have slightly improved the readability score to 9.2, and 8.2 on Flesch-Kincaid.

Shall we edit another readability study abstract?

STUDY #3: Do People Comprehend Legal Language in Wills?

Here’s the third study’s abstract. It is longer than the other two. It is written so clearly that you might even understand it. Even so, it measures at a Grade 17 level. That’s better than the others, but not by much.

Summary: This study assessed the ability of laypeople to understand a document that most have read and signed: a last will and testament. We focused on concepts that are frequently included in wills, examined whether understanding can be enhanced by psycholinguistic revisions, and assessed comprehension as a function of age. Participants ages 32 to 89 years read will-related concepts in (i) their traditional format, (ii) a version revised to increase readability, or (iii) a version in which, in addition to those changes, we explained archaic and legal terms. Results showed that increasing the readability and explaining terms enhanced participants’ abilities to apply will-related concepts to novel fact patterns and to explain their reasoning. We found no age-related effects on comprehension, consistent with well-documented findings that processing at the situation level of text comprehension is preserved in older adults. We discuss the implications of these findings and suggest ideas for further research.

This study showed that people have significant difficulty understanding the concepts described in traditional, boilerplate wills. When asked to apply a will-related concept to a novel fact pattern, participants who read boilerplate excerpts were able to do this correctly less than 60% of the time (when the guessing rate was 50%). Importantly, many attorneys rely on these boilerplate templates when they draft documents and may not explain essential concepts to their clients in language that is accessible to those clients. As a result, sizeable numbers of individuals may have wills that contain language they do not understand.

Results also showed that comprehension can be enhanced by carefully revising the syntax and by providing explanations of complex terms. Merely increasing readability by removing archaic terms and simplifying the syntax of will excerpts may be necessary but not sufficient. Like Masson and Waldron (1994), we found that only when readability was increased via syntactic changes and when terms were explained did participants show significant improvement in their ability to apply and explain these concepts. Apparently, both syntactic simplification and lexical clarification are prerequisites to enhanced understanding. By shortening sentences and removing passive constructions, we enabled participants to form and maintain a more coherent representation of the core concept in a passage, and by explaining unfamiliar terminology, we made the concepts more accessible to these legally untrained readers.

Now, here is our edit at a Grade 9.8 level. This abstract is somewhat clearer than the others. In fact, there are many sentences that we did not even edit. But we still brought the grade level down quite a bit.

Also note that we did not edit “situation level”, because we don’t know what that means. In other words, that term should be replaced by something that people will understand.

Summary: This study assesses how well people understand a popular document. Most have read and signed a last will and testament. We focused on concepts that are often in wills. We tested psycholinguistic edits to see if people would understand those concepts better. We assessed how age affects comprehension.

People ages 32 to 89 years read will-related concepts in:

- their traditional format

- an edited version to increase readability

- an edited version to increase readability, in which we also explained archaic and legal terms

The results show that people understand will-related concepts better when readability is improved and terms are explained. They apply those concepts better to new situations. And they can explain their reasoning. We found no age-related effects on comprehension. This confirms well-documented findings that older people process text as well as younger people at the situation level.

We discuss what these findings mean and suggest ideas for further research.

This study shows that people have a hard time understanding concepts in standard, boilerplate wills. We asked people to apply a will-related concept to a new situation. Those who read boilerplate excerpts were able to do this correctly less than 60% of the time. The guessing rate was 50%.

Many lawyers rely on boilerplate templates to draft wills. They might not explain key concepts to their clients in language they can understand. So, many people might not understand their wills.

The results also show that people understand simpler syntax better. And they understand better when complex and archaic terms are explained. Our results confirm those of Masson and Waldron (1994). They found that people could apply and explain these concepts better only when:

- terms were explained

- readability increased via syntax changes

Apparently, both simpler syntax and explaining terms are needed to better understand.

But these increases in readability might not be enough. We next shortened sentences and removed the passive voice. Then people understood and retained the main ideas better. And by explaining unfamiliar terms, we helped these legally untrained readers grasp the ideas.

The edited version is more readable, although the improvements are not as big as with the other two abstracts. This one was more readable to begin with.
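Shorter sentences account for a lot of the gain in edits like these. As a rough aid, here is a Python sketch that lists the longest sentences in a text, so you know where to start cutting. The function name and the 20-word cutoff are our own assumptions, not a standard.

```python
import re


def long_sentences(text: str, max_words: int = 20) -> list[tuple[int, str]]:
    """Return (word_count, sentence) pairs for sentences over max_words.

    The 20-word default is an arbitrary cutoff chosen for illustration.
    """
    # Naive splitting: break after ., ! or ? when whitespace follows.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    flagged = []
    for sentence in sentences:
        word_count = len(sentence.split())
        if word_count > max_words:
            flagged.append((word_count, sentence))
    # Longest first, so the worst offenders come up top.
    return sorted(flagged, key=lambda pair: pair[0], reverse=True)


if __name__ == "__main__":
    sample = (
        "We found no age-related effects on comprehension, consistent with "
        "well-documented findings that processing at the situation level of "
        "text comprehension is preserved in older adults. "
        "We found no age-related effects on comprehension."
    )
    for word_count, sentence in long_sentences(sample):
        print(word_count, sentence)
```

A long sentence is not always a bad one. Treat the list as a place to start reading, not as a verdict.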

It seems ironic to have to edit an abstract on readability to make it readable. Such is how far we have fallen off the cliff of effective communication.

Now it’s time to look at your articles, your letters, your blog posts, your brochures and your websites. Are they as clear as they could be? Are you losing customers and readers because some find your writing too hard to read?

If so, it’s time for an edit.

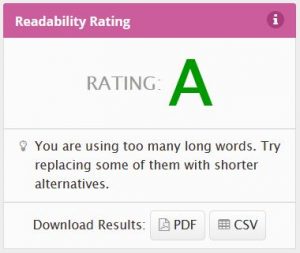

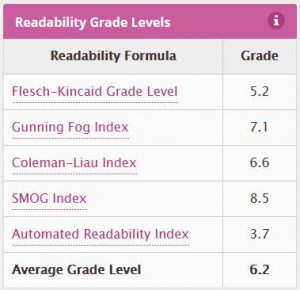

By the way, were you wondering what this blog post’s grade level is? It’s Grade 6.2. That’s all the words before, after and between the abstracts.

Hi Dave,

What amazing information!

Readability is one of the main aspects we need to look into while creating content.

This comprehensive analysis is really wonderful. Thanks for bringing out those 3 abstracts about readability.

Noteworthy abstracts.

Indeed, I was surprised to note the statement you made: “The average person reads in the range of Grade 6 to Grade 8.” Yes, that really shocked me!

Thanks, Dave, for sharing that wonderful readability checking tool.

Keep sharing.

Have a great time of sharing and caring ahead

Best

~ Phil

Wow, what a comprehensive outline of the different levels of Readability!

I started off my career blogging not really paying much attention to Readability. That is not to say my content wasn’t good. But I discovered I was writing OVER my audience’s head.

I soon learned, however, that readability, like you said, is one of the most important aspects we need to consider when writing.

Thanks for the breakdown. It’s worth another read 🙂